Ever noticed how two people can read the same news story and walk away with completely different understandings? Or how you’ll defend your own mistake as a one-off accident, but see someone else’s similar error as proof they’re careless? That’s not just disagreement - it’s your brain running on autopilot, guided by hidden mental filters called cognitive biases.

These aren’t flaws in your character. They’re built-in shortcuts your brain uses to save energy. For our ancestors, they were a survival tool: if you heard rustling in the bushes, assuming it was a predator was safer than assuming it was the wind. But today, these same shortcuts distort how you interpret information, respond to others, and even make big life decisions - often without you realizing it.

How Beliefs Trick Your Brain Into Automatic Responses

Your brain doesn’t process information like a computer. It doesn’t weigh facts equally. Instead, it filters everything through what you already believe. This is called confirmation bias - and it’s one of the most powerful forces shaping your everyday responses.

When you read a post that agrees with your views, your brain lights up with pleasure. When you encounter something that challenges them, your body reacts like you’re under threat: heart rate increases, stress hormones spike, and your instinct is to reject it. A 2022 study of more than 15,000 political threads on Reddit found people were 4.3 times more likely to call opposing views "biased" - no matter how solid the evidence.

This isn’t about being closed-minded. It’s about biology. fMRI studies of motivated reasoning suggest that when you’re confronted with information that threatens your beliefs, regions associated with deliberate reasoning (such as the dorsolateral prefrontal cortex) show little engagement, while areas tied to emotion and self-relevant judgment (such as the ventromedial prefrontal cortex) become more active. Your brain isn’t trying to deceive you - it’s trying to protect your sense of identity.

The Hidden Rules Behind Your Reactions

Confirmation bias isn’t the only silent player. Other biases shape your responses in ways you never notice:

- Self-serving bias: You take credit when things go well (“I nailed that presentation!”) but blame traffic, bad luck, or your team when they don’t (“The client changed the brief at the last minute”).

- Fundamental attribution error: You see someone else’s mistake as a character flaw (“They’re lazy”), but the same mistake of your own as situational (“I was just tired”).

- False consensus effect: You assume everyone thinks like you. If you believe organic food is always better, you’ll guess 70% of people agree - even though surveys show only 45% do.

- Hindsight bias: After something happens, you swear you saw it coming. “I knew that stock was going to crash!” - even if you bought it last week.

These aren’t quirks. They’re universal. A 2023 meta-analysis of over 1,200 studies found that 97.3% of human decisions are influenced by at least one cognitive bias - whether you’re choosing a restaurant, hiring someone, diagnosing a patient, or voting.

Real-World Damage From Invisible Thinking Errors

These biases don’t just mess with your opinions - they mess with real outcomes.

In healthcare, diagnostic errors caused by confirmation bias account for 12-15% of all adverse events, according to Johns Hopkins Medicine. A doctor who believes a patient has anxiety might dismiss chest pain as stress - missing a heart condition. A 2021 University of Michigan study found medical students who scored higher on cognitive reflection tests (meaning they questioned their first instinct) made 28.9% fewer diagnostic mistakes.

In the legal system, eyewitness misidentifications - heavily shaped by expectation bias - contributed to 69% of wrongful convictions later overturned by DNA evidence, per the Innocence Project. Eyewitnesses usually aren’t lying. They’re convinced they “know” what happened because it fits their story.

And in finance? Retail investors who overestimate their odds of success (optimism bias) earn 4.7 percentage points less annually than those who stay grounded in data, according to a 2023 Journal of Finance study. They sell too late, buy too early, and ignore warning signs because their gut says, “This time’s different.”

Why We’re Blind to Our Own Biases

The weirdest part? Most people think they’re less biased than others.

Princeton psychologist Emily Pronin’s 2002 study found that 85.7% of participants believed they were less prone to bias than their peers. That’s the bias blind spot - and it’s why most people never correct biases they can’t see in themselves.

Even more startling: 75% of people hold unconscious biases that contradict their stated beliefs. Mahzarin Banaji’s Implicit Association Tests show that people who describe themselves as egalitarian still respond more slowly when positive words are paired with faces from certain racial groups - revealing hidden associations they didn’t know they had.

This isn’t about being a bad person. It’s about how the brain works. We’re not machines. We’re storytellers, pattern-seekers, identity-protectors. And our brains are wired to make sense of the world - not to be perfectly accurate.

How to Break the Pattern (Without Fighting Your Brain)

You can’t delete your biases. But you can outsmart them.

Here’s what actually works:

- Consider the opposite. Before making a decision, force yourself to write down three reasons why your belief might be wrong. University of Chicago research showed this cuts confirmation bias by 37.8%.

- Slow down your response. When you feel a strong emotional reaction to new information, pause for 10 seconds. Ask: “Would I think this if I didn’t already believe it?”

- Use structured checklists. Doctors using mandatory alternative diagnosis protocols reduced diagnostic errors by 28.3%. You can do the same: before sending that email, before making that hire, before posting that comment - run through a simple list: “What’s another way to see this?”

- Seek out discomfort. Follow people online who challenge your views - not to argue, but to understand. Exposure reduces the fear of difference.

- Track your predictions. Write down what you think will happen before an event. Later, compare it to reality. You’ll start seeing patterns in your overconfidence.
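If you keep your prediction log in a spreadsheet or file, a few lines of code can do the "compare it to reality" step for you. The Python sketch below is a hypothetical example, not a tool from any of the studies mentioned here: it assumes you record each prediction with a confidence level you chose yourself (such as 50%, 70%, or 90%) and whether it came true, then groups the predictions by confidence and checks how often each group was actually right. The log entries are made up for illustration.

```python
from collections import defaultdict

# Hypothetical prediction log: (prediction, stated confidence, did it happen?).
# These entries are invented examples, not real data.
predictions = [
    ("Project ships on time", 0.9, False),
    ("Candidate accepts offer", 0.9, True),
    ("Client approves first draft", 0.9, False),
    ("Stock closes higher Friday", 0.7, False),
    ("Meeting runs over", 0.7, True),
    ("It rains on Saturday", 0.5, True),
]


def calibration_report(log):
    """Group predictions by stated confidence and return
    {confidence: (number of predictions, fraction that came true)}."""
    buckets = defaultdict(list)
    for _, confidence, happened in log:
        buckets[confidence].append(happened)
    report = {}
    for confidence in sorted(buckets):
        outcomes = buckets[confidence]
        hit_rate = sum(outcomes) / len(outcomes)  # True counts as 1, False as 0
        report[confidence] = (len(outcomes), hit_rate)
    return report


for confidence, (count, hit_rate) in calibration_report(predictions).items():
    # A positive gap means you were more sure than your track record justifies.
    label = "overconfident" if confidence > hit_rate else "not overconfident"
    print(f"Said {confidence:.0%} sure on {count} prediction(s); "
          f"right {hit_rate:.0%} of the time ({label})")
```

If your "90% sure" predictions come true far less than 90% of the time, that gap is the overconfidence pattern the tip describes. A handful of entries proves little, so the comparison only becomes meaningful after you have logged a fair number of predictions at each confidence level.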

These aren’t quick fixes. A 2022 study tracking 450 people found it took 6-8 weeks of consistent practice to reduce automatic belief-driven responses. But the payoff? Sharper decisions, better relationships, and fewer regrets.

What’s Changing - And Why It Matters

This isn’t just psychology. It’s becoming policy.

The European Union’s AI Act, whose first obligations began applying in February 2025, requires providers of high-risk AI systems to examine their training data for possible biases. Google’s Bias Scanner API now analyzes over 2.4 billion queries monthly to flag language that reflects belief-driven thinking. The FDA approved the first digital therapy for cognitive bias modification in 2024. And 28 U.S. states now teach cognitive bias literacy in high school.

Why? Because the cost of ignoring these biases is huge. The World Economic Forum estimates suboptimal decisions due to cognitive bias cost the global economy $3.2 trillion a year - more than the GDP of Australia.

And yet, most people still think this is just “philosophy.” It’s not. It’s neuroscience. It’s data. It’s real money, real lives, real errors.

Final Thought: You’re Not Broken - You’re Human

Cognitive biases aren’t something to feel guilty about. They’re part of being human. Your brain didn’t evolve to be perfectly rational. It evolved to help you survive, connect, and belong.

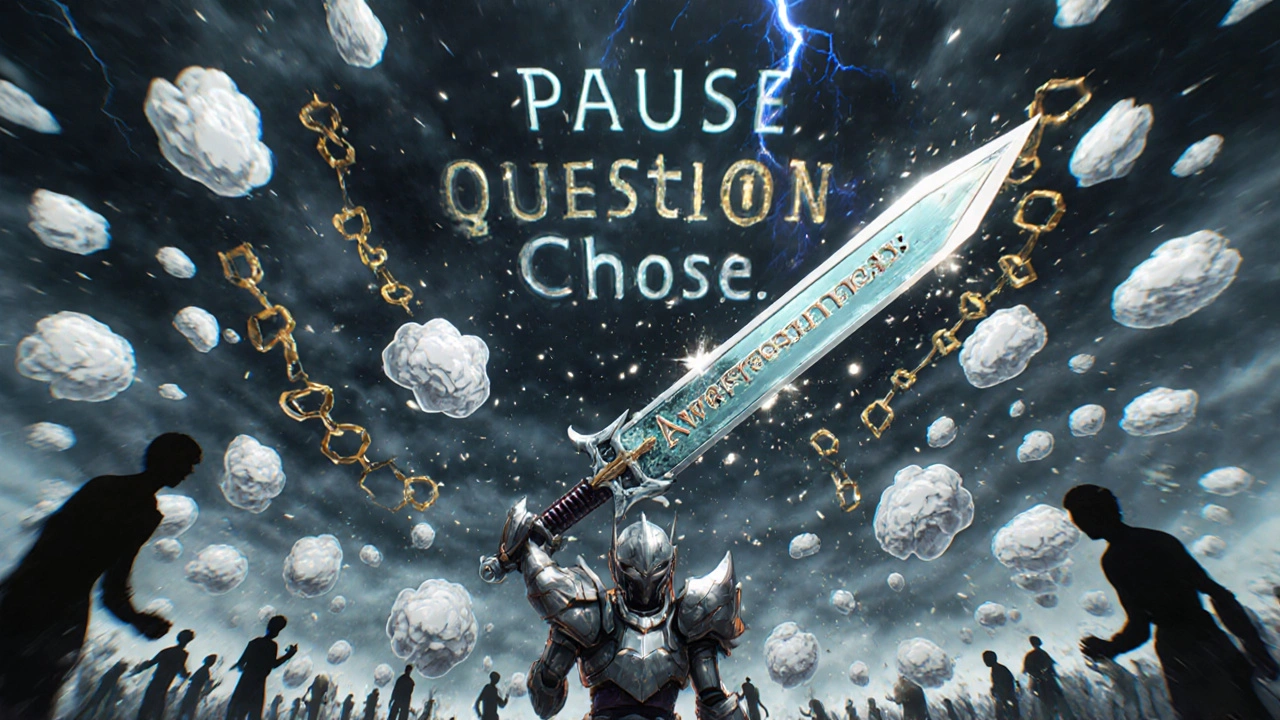

The goal isn’t to become a cold, logical robot. It’s to recognize when your brain is taking the easy path - and choose, sometimes, to take the harder one.

Next time you catch yourself saying, “Of course they’re wrong,” pause. Ask: “What’s the story my brain is telling me - and what’s the one I’m ignoring?”

That small moment of awareness? That’s where better decisions begin.

Are cognitive biases the same as stereotypes?

No. Stereotypes are generalizations about groups of people - like assuming all teenagers are reckless. Cognitive biases are mental shortcuts that affect how you process information - like only noticing news that confirms your existing views. Stereotypes can be fueled by cognitive biases, but they’re not the same thing. One is a belief about others; the other is a pattern in how you think.

Can you completely eliminate cognitive biases?

No - and you shouldn’t try. Biases are automatic, evolved responses. Trying to eliminate them completely is like trying to stop breathing. The goal isn’t perfection. It’s awareness. When you notice a bias in action, you gain the power to pause, question, and choose a better response.

Do cultural differences affect cognitive biases?

Yes. Self-serving bias, for example, is 28.3% stronger in individualistic cultures like the U.S. or Australia than in collectivist cultures like Japan or South Korea. In Western cultures, people focus more on personal responsibility. In Eastern cultures, context and group harmony matter more. But the core mechanisms - like confirmation bias - appear across all cultures. The content changes; the pattern doesn’t.

How do cognitive biases affect AI systems?

AI doesn’t have beliefs - but it learns from human data. If the data reflects biased human decisions - like hiring patterns or loan approvals - the AI will replicate them. That’s why the EU AI Act requires providers of high-risk AI systems to check their data for bias. Tools like IBM’s Watson OpenScale monitor deployed models for fairness, flagging when outcomes consistently favor one group over another - often a sign that biased human decisions were baked into the training data.

Is there proof that bias training actually works?

Yes. A 2022 JAMA Psychiatry review of 17 randomized trials found Cognitive Bias Modification (CBM) reduced belief-consistent responses by 32.4% after 8-12 sessions. Hospitals using mandatory diagnostic checklists cut errors by nearly 30%. The key isn’t one-off workshops - it’s consistent practice. Like exercise, bias awareness needs repetition to stick.

Why do people resist learning about cognitive biases?

Because it feels like a personal attack. Admitting you’re biased means admitting you’re not as rational as you think. McKinsey found 68.4% of employees initially resist bias training because they see it as implying they’re flawed. But the best programs reframe it: this isn’t about blame - it’s about building better tools for thinking. Once people see it as a skill, not a shame, adoption rises.

Understanding how your beliefs shape your responses isn’t about becoming perfect. It’s about becoming more aware - and that awareness, over time, changes everything.
